Hello readers. Here I am, bringing you more interesting tutorials in Python. Today we take a look at Python speech recognition!

I will be working on Google Colab.

Initial imports

We’ll initially need to install some additional libraries:

!pip install SpeechRecognition !pip install pyaudio !pip install ffmpeg-python

You may have to install some additional dependencies based on your system, so just follow the warnings.

Next import all the necessary libraries:

from IPython.display import HTML, Audio from google.colab.output import eval_js from base64 import b64decode import numpy as np from scipy.io.wavfile import read as wav_read import io import ffmpeg

Next, we’ll have to read the microphone input. I had to make a small javascript snippet for Google Colab.

If you’re working on your system, then no worries.

AUDIO_HTML = """

<script>

var my_div = document.createElement("DIV");

var my_p = document.createElement("P");

var my_btn = document.createElement("BUTTON");

var t = document.createTextNode("Press to start recording");

my_btn.appendChild(t);

//my_p.appendChild(my_btn);

my_div.appendChild(my_btn);

document.body.appendChild(my_div);

var base64data = 0;

var reader;

var recorder, gumStream;

var recordButton = my_btn;

var handleSuccess = function(stream) {

gumStream = stream;

var options = {

//bitsPerSecond: 8000, //chrome seems to ignore, always 48k

mimeType : 'audio/webm;codecs=opus'

//mimeType : 'audio/webm;codecs=pcm'

};

//recorder = new MediaRecorder(stream, options);

recorder = new MediaRecorder(stream);

recorder.ondataavailable = function(e) {

var url = URL.createObjectURL(e.data);

var preview = document.createElement('audio');

preview.controls = true;

preview.src = url;

document.body.appendChild(preview);

reader = new FileReader();

reader.readAsDataURL(e.data);

reader.onloadend = function() {

base64data = reader.result;

//console.log("Inside FileReader:" + base64data);

}

};

recorder.start();

};

recordButton.innerText = "Recording... press to stop";

navigator.mediaDevices.getUserMedia({audio: true}).then(handleSuccess);

function toggleRecording() {

if (recorder && recorder.state == "recording") {

recorder.stop();

gumStream.getAudioTracks()[0].stop();

recordButton.innerText = "Saving the recording... pls wait!"

}

}

// https://stackoverflow.com/a/951057

function sleep(ms) {

return new Promise(resolve => setTimeout(resolve, ms));

}

var data = new Promise(resolve=>{

//recordButton.addEventListener("click", toggleRecording);

recordButton.onclick = ()=>{

toggleRecording()

sleep(2000).then(() => {

// wait 2000ms for the data to be available...

// ideally this should use something like await...

//console.log("Inside data:" + base64data)

resolve(base64data.toString())

});

}

});

</script>

"""This will convert our file to base64 format and store it.

Next, we can create a function that will use this JS script to capture our microphone input.

def get_audio():

display(HTML(AUDIO_HTML))

data = eval_js("data")

binary = b64decode(data.split(',')[1])

process = (ffmpeg

.input('pipe:0')

.output('pipe:1', format='wav')

.run_async(pipe_stdin=True, pipe_stdout=True, pipe_stderr=True, quiet=True, overwrite_output=True)

)

output, err = process.communicate(input=binary)

riff_chunk_size = len(output) - 8

# Break up the chunk size into four bytes, held in b.

q = riff_chunk_size

b = []

for i in range(4):

q, r = divmod(q, 256)

b.append(r)

# Replace bytes 4:8 in proc.stdout with the actual size of the RIFF chunk.

riff = output[:4] + bytes(b) + output[8:]

sr, audio = wav_read(io.BytesIO(riff))

return audio, srThen we can simply run:

audio, sr = get_audio()

and give access to the microphone when prompted.

It will start recording, and when you’re done press stop.

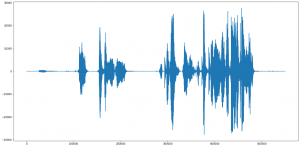

We can view the waveform of our audio file as:

import matplotlib.pyplot as plt plt.figure(figsize=(20,10)) plt.plot(audio) plt.show()

Next, just click on the audio to download it, and then we can perform speech recognition on it !!!

Now we can just import the file and run speech recognition on it, and mine outputs:

“Hey, How are you. Today I want to talk about Speech Recognition.”

Full Code

!pip install SpeechRecognition

!pip install pyaudio

!pip install ffmpeg-python

from IPython.display import HTML, Audio

from google.colab.output import eval_js

from base64 import b64decode

import numpy as np

from scipy.io.wavfile import read as wav_read

import io

import ffmpeg

AUDIO_HTML = """

<script>

var my_div = document.createElement("DIV");

var my_p = document.createElement("P");

var my_btn = document.createElement("BUTTON");

var t = document.createTextNode("Press to start recording");

my_btn.appendChild(t);

//my_p.appendChild(my_btn);

my_div.appendChild(my_btn);

document.body.appendChild(my_div);

var base64data = 0;

var reader;

var recorder, gumStream;

var recordButton = my_btn;

var handleSuccess = function(stream) {

gumStream = stream;

var options = {

//bitsPerSecond: 8000, //chrome seems to ignore, always 48k

mimeType : 'audio/webm;codecs=opus'

//mimeType : 'audio/webm;codecs=pcm'

};

//recorder = new MediaRecorder(stream, options);

recorder = new MediaRecorder(stream);

recorder.ondataavailable = function(e) {

var url = URL.createObjectURL(e.data);

var preview = document.createElement('audio');

preview.controls = true;

preview.src = url;

document.body.appendChild(preview);

reader = new FileReader();

reader.readAsDataURL(e.data);

reader.onloadend = function() {

base64data = reader.result;

//console.log("Inside FileReader:" + base64data);

}

};

recorder.start();

};

recordButton.innerText = "Recording... press to stop";

navigator.mediaDevices.getUserMedia({audio: true}).then(handleSuccess);

function toggleRecording() {

if (recorder && recorder.state == "recording") {

recorder.stop();

gumStream.getAudioTracks()[0].stop();

recordButton.innerText = "Saving the recording... pls wait!"

}

}

// https://stackoverflow.com/a/951057

function sleep(ms) {

return new Promise(resolve => setTimeout(resolve, ms));

}

var data = new Promise(resolve=>{

//recordButton.addEventListener("click", toggleRecording);

recordButton.onclick = ()=>{

toggleRecording()

sleep(2000).then(() => {

// wait 2000ms for the data to be available...

// ideally this should use something like await...

//console.log("Inside data:" + base64data)

resolve(base64data.toString())

});

}

});

</script>

"""

def get_audio():

display(HTML(AUDIO_HTML))

data = eval_js("data")

binary = b64decode(data.split(',')[1])

process = (ffmpeg

.input('pipe:0')

.output('pipe:1', format='wav')

.run_async(pipe_stdin=True, pipe_stdout=True, pipe_stderr=True, quiet=True, overwrite_output=True)

)

output, err = process.communicate(input=binary)

riff_chunk_size = len(output) - 8

# Break up the chunk size into four bytes, held in b.

q = riff_chunk_size

b = []

for i in range(4):

q, r = divmod(q, 256)

b.append(r)

# Replace bytes 4:8 in proc.stdout with the actual size of the RIFF chunk.

riff = output[:4] + bytes(b) + output[8:]

sr, audio = wav_read(io.BytesIO(riff))

return audio, sr

audio, sr = get_audio()

import matplotlib.pyplot as plt

plt.figure(figsize=(20,10))

plt.plot(audio)

plt.show()

import speech_recognition as sr

r = sr.Recognizer()

AUDIO_FILE = ("/content/audio.wav")

with sr.AudioFile(AUDIO_FILE) as source:

#reads the audio file. Here we use record instead of

#listen

audio = r.record(source)

r.recognize_google(audio)Ending Note

If you liked reading this article and want to read more, continue to follow the site! We have a lot of interesting articles upcoming in the near future.