This article will help you design an eye-tracking neural network in Python on your own.

Many new deep neural networks have appeared in recent years. Because they contain a large number of layers, training them from scratch takes a long time and requires a large dataset.

Pretrained models have eased much of this burden. For most tasks, you can start from an already trained deep neural network, which makes further training much easier.

Well-known deep networks such as YOLOv3 and SSD are built to detect and track many kinds of objects, so the models are large and their precision on one narrow task can be poor.

Eye-tracking activities in a given area need to locate only one object – an iris.

It makes sense, therefore, to use a neural network built only for this task. The difficulty is the lack of sufficiently large datasets for training the model.

Preparing The Eye Tracking Dataset

The dataset that inspired this article is available here: https://www.kaggle.com/ildaron/dataset-eyetracking/tasks

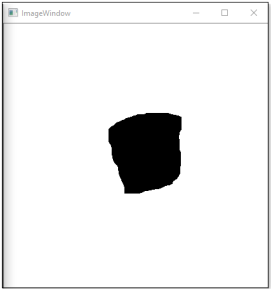

The approach we are looking at today detects eye motion solely from the iris. This leads to two requirements:

- The model of the neural network must have high iris recognition accuracy.

- Eye color varies from person to person, so the neural network should focus on features that are not directly tied to color, since the training data cannot cover every possible eye color.
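One common way to discourage a network from relying on color is to randomize color during training. The sketch below is only an illustration of that idea and is not taken from the dataset's own pipeline: the function name `jitter_color` and the `max_gain` parameter are hypothetical, and the input is assumed to be an 8-bit RGB image stored as a NumPy array.

```python
import numpy as np

def jitter_color(image, rng, max_gain=0.2):
    """Return a color-randomized copy of an 8-bit RGB image, scaled to [0, 1].

    Hypothetical helper: shuffles the channel order and applies a random
    per-channel gain, so shape and edges stay the same while color changes.
    """
    img = np.asarray(image, dtype=np.float32) / 255.0
    # Shuffle the order of the three color channels.
    img = img[..., rng.permutation(3)]
    # Scale each channel by a random factor in [1 - max_gain, 1 + max_gain].
    gains = rng.uniform(1.0 - max_gain, 1.0 + max_gain, size=3).astype(np.float32)
    return np.clip(img * gains, 0.0, 1.0)

rng = np.random.default_rng(0)
example = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
augmented = jitter_color(example, rng)
```

Applying a function like this to each training image would leave the iris outline untouched while making its color unreliable as a cue.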

Structure of the Eye Tracking Neural Network

As mentioned earlier, this article is meant to help you implement the eye-tracking neural network in Python on your own. It does not start from scratch, so the basics of building a neural network are not repeated here.

If you need to brush up on those basics, go through the short articles listed below. They are easy to follow and quick to read. Once done, continue right where you left off here!

- Python TensorFlow Tutorial

- Keras Deep Learning Tutorial

- Max Pooling in Python – A Brief Introduction

- Overfitting – What is it and How to Avoid Overfitting a model?

- Single Perceptron Neural Network

The structure of our neural network will be as follows:

We use six convolution layers together with max pooling. We then flatten the output and apply dropout to reduce overfitting.
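A minimal Keras sketch of such a structure might look like the following. Only the overall shape (six convolution layers, max pooling, flatten, dropout) comes from the description above. The 64x64 RGB input size, the filter counts, the pairing of every convolution with a pooling step, the dropout rate, and the two-value output (assumed here to be the x and y position of the iris) are all assumptions made for illustration, and `build_iris_model` is a hypothetical name.

```python
import tensorflow as tf
from tensorflow.keras import layers

def build_iris_model(input_shape=(64, 64, 3), dropout_rate=0.5):
    """Sketch of a six-convolution network; all sizes are assumed values."""
    model = tf.keras.Sequential()
    model.add(tf.keras.Input(shape=input_shape))
    # Six convolution layers, each followed by 2x2 max pooling (assumed pairing).
    for filters in (16, 32, 64, 64, 128, 128):
        model.add(layers.Conv2D(filters, 3, padding="same", activation="relu"))
        model.add(layers.MaxPooling2D(pool_size=2))
    # Flatten the feature maps and apply dropout to reduce overfitting.
    model.add(layers.Flatten())
    model.add(layers.Dropout(dropout_rate))
    # Assumed output: two regression values for the iris position.
    model.add(layers.Dense(2))
    model.compile(optimizer="adam", loss="mse")
    return model

model = build_iris_model()
model.summary()
```

With a 64x64 input, the six pooling steps halve the feature map down to 1x1, so the flattened vector stays small. A larger input or fewer pooling steps would change that trade-off.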

How to Track Features of the Eye?

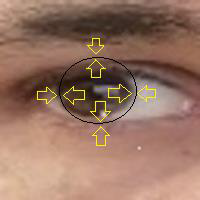

As you can see from the picture above, the input of the neural network is a matrix of RGB values, and the direction arrows show where the image changes from dark to light.

The same procedure is applied to input images of various sizes, so that features are captured at every scale.

The process is also the same as the one in our face recognition implementation.

Do you remember what this process is called? Take some time…don’t scroll down until you have an answer.

Let me illustrate the process here:

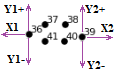

If your answer to my question was Histogram of Oriented Gradients (HOG), you’re absolutely correct!
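To make the idea concrete, here is a simplified NumPy sketch of the computation at the heart of HOG: per-pixel gradients, their orientation, and a magnitude-weighted histogram of those orientations. It is deliberately reduced, building one histogram for the whole image rather than the per-cell histograms and block normalization of full HOG. The function name `orientation_histogram` and the choice of 9 unsigned bins are assumptions for illustration. For real work, a library implementation such as `skimage.feature.hog` is a better starting point.

```python
import numpy as np

def orientation_histogram(image, n_bins=9):
    """Magnitude-weighted histogram of gradient orientations (0 to 180 degrees)."""
    gray = np.asarray(image, dtype=np.float64)
    if gray.ndim == 3:
        # Average the color channels to get a single intensity image.
        gray = gray.mean(axis=2)
    # Gradients along rows (y) and columns (x): they point from dark to light.
    gy, gx = np.gradient(gray)
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation: opposite directions fall into the same bin.
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    hist, _ = np.histogram(angle, bins=n_bins, range=(0.0, 180.0), weights=magnitude)
    total = hist.sum()
    return hist / total if total > 0 else hist

# A left-to-right brightness ramp has purely horizontal gradients.
ramp = np.tile(np.arange(8.0), (8, 1))
print(orientation_histogram(ramp))
```

For the ramp, all gradient energy lands in the first bin, because every arrow points in the same horizontal dark-to-light direction.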

Visualizing the

The complete procedure for eye feature tracking is as below:

Further

The eye-tracking mechanism can also be applied to video footage by splitting it into frames and tracking eye movement from one frame to the next. This is useful in a variety of fields, such as attention research and child psychology.
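The frame-by-frame idea can be sketched as follows. Everything here is illustrative: frames are assumed to be NumPy arrays (in practice they could be read from a video with a tool such as OpenCV's `cv2.VideoCapture`), and `dark_centroid` is a hypothetical stand-in for the trained network that simply returns the centroid of the darkest pixels in a frame.

```python
import numpy as np

def dark_centroid(frame, fraction=0.2):
    """Stand-in predictor: (x, y) centroid of the darkest pixels in a frame."""
    gray = np.asarray(frame, dtype=np.float64)
    if gray.ndim == 3:
        gray = gray.mean(axis=2)
    # Keep pixels within `fraction` of the intensity range above the minimum.
    threshold = gray.min() + fraction * (gray.max() - gray.min())
    ys, xs = np.nonzero(gray <= threshold)
    return float(xs.mean()), float(ys.mean())

def track(frames, predict=dark_centroid):
    """Run the predictor on every frame and return positions and movements."""
    centers = np.array([predict(frame) for frame in frames])
    # Frame-to-frame displacement of the predicted position.
    displacements = np.diff(centers, axis=0)
    return centers, displacements

# Synthetic footage: a dark 4x4 square moving right on a bright background.
frames = []
for x0 in (4, 10, 16):
    frame = np.full((32, 32), 255, dtype=np.uint8)
    frame[8:12, x0:x0 + 4] = 0
    frames.append(frame)

centers, displacements = track(frames)
print(centers)
print(displacements)
```

Swapping `dark_centroid` for a call to a trained model would keep the rest of the loop unchanged.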

Compared with current state-of-the-art event detection algorithms, machine-learning techniques lead to superior detection and can reach the performance of manual coding.

We give an example where the approach is used in an eye movement-driven biometric application in an attempt to demonstrate practical utility of the proposed method for applications that use eye movement classification algorithms.

Ending Note

Event detection is a difficult stage in the analysis of eye-movement data.

A major disadvantage of existing event detection methods is that their parameters have to be adjusted depending on the quality of the eye-movement data.
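To see what such a parameter looks like, consider a classical velocity-threshold detector in the style of I-VT. The sketch below is an illustration of that family of methods, not something used elsewhere in this article. The function name `classify_ivt` is hypothetical, gaze coordinates are assumed to be in degrees of visual angle, and the default threshold of 30 degrees per second is an assumed value of exactly the kind that needs retuning for noisier data.

```python
import numpy as np

def classify_ivt(x, y, sampling_rate_hz, velocity_threshold=30.0):
    """Label each gaze sample as 'fixation' or 'saccade' by its speed."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    # Point-to-point speed in degrees per second.
    speed = np.hypot(np.diff(x), np.diff(y)) * sampling_rate_hz
    # Reuse the first speed so that every sample receives a label.
    speed = np.concatenate([speed[:1], speed])
    return np.where(speed > velocity_threshold, "saccade", "fixation")

# Two short fixations separated by a fast jump, sampled at 100 Hz.
x = [0.00, 0.01, 0.02, 2.00, 4.00, 4.01, 4.02]
y = [0.0] * 7
print(classify_ivt(x, y, sampling_rate_hz=100))
```

Lowering or raising `velocity_threshold` changes which samples count as saccades, which is the manual tuning step that a learned classifier aims to remove.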

Here, we demonstrate that using a deep-learning approach, a completely automatic classification of raw gaze samples as belonging to fixations, saccades, or other oculomotor events can be achieved.

If you liked reading this article and want to read more, continue to follow codegigs. Stay tuned for many such interesting articles in the coming few days!

Happy learning! 🙂