What Are Parameters and Hyperparameters in Machine Learning?

Let’s find out what parameters are.

Well, parameters are simply the model parameters.

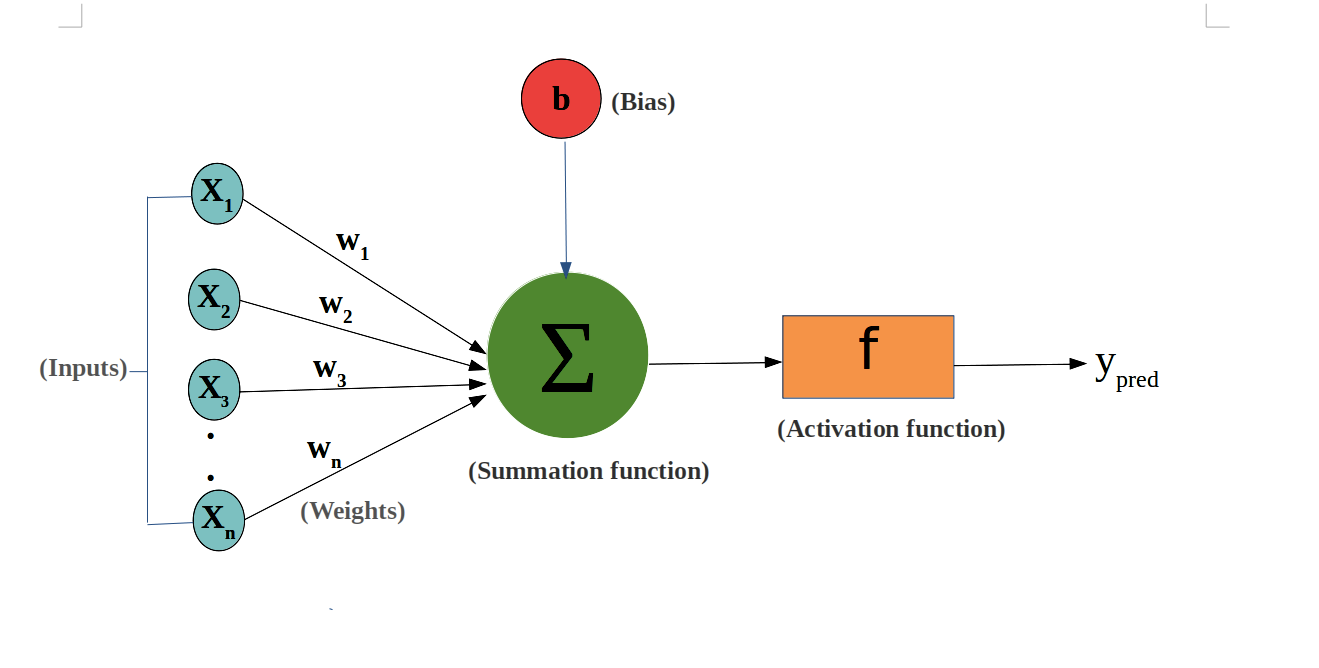

What are model parameters? They are values such as the weights and biases of a neural network.

The parameters of a machine learning model are the values that are updated during training by an optimisation procedure such as gradient descent.
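To make this concrete, here is a minimal sketch, assuming a one-feature linear model `y = w * x + b` trained with plain gradient descent on mean squared error. The toy data and the function name `train` are made up for illustration. The parameters `w` and `b` start at zero and the optimiser updates them on every step; nobody sets their final values by hand.

```python
# Toy data generated from y = 2x + 1 (made up for illustration)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]


def train(learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters: initialised here, then learned
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Optimisation step: move each parameter against its gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train()
print(f"w = {w:.3f}, b = {b:.3f}")  # learned values close to 2 and 1
```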

Now let’s talk about hyperparameters.

Hyperparameters are the settings that control how the algorithm trains, or learns.

For example:

- learning rate

- batch size (all the data at once, a mini-batch, or a single data point at a time)

- number of epochs
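The batch size decides how the training data is split on each pass over it (one epoch), and together with the number of epochs it fixes how many parameter updates happen. A minimal sketch, assuming one update per batch; the helper names `make_batches` and `count_updates` are hypothetical, chosen for illustration:

```python
import math


def make_batches(data, batch_size):
    """Split data into consecutive batches of at most batch_size items."""
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]


def count_updates(n_samples, batch_size, epochs):
    """One parameter update per batch, repeated for every epoch."""
    return math.ceil(n_samples / batch_size) * epochs


data = list(range(10))  # ten toy data points

full_batch = make_batches(data, batch_size=len(data))  # all the data at once
mini_batch = make_batches(data, batch_size=4)          # mini-batches
single = make_batches(data, batch_size=1)              # one data point at a time

print(len(full_batch), len(mini_batch), len(single))   # 1 3 10
print(count_updates(10, batch_size=4, epochs=5))       # 15
```

Here the last mini-batch holds only the two leftover points; this sketch keeps such a partial batch rather than dropping it.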

The values of a model's hyperparameters are decided before training begins, and training does not change them. Instead, they affect the quality and speed of training. In this sense they sit above (hyper-) the training process, hence the name hyperparameters.
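As a rough illustration of "quality and speed", the sketch below assumes a toy one-feature linear model fitted by gradient descent (the data and function names are made up for illustration). It trains three times for the same number of epochs with different learning rates. The learning rate is fixed before each run and never changes during it, yet it decides how far the loss falls.

```python
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # toy data from y = 2x + 1


def mse(w, b):
    """Mean squared error of the line y = w * x + b on the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(learning_rate, epochs):
    """Gradient descent on w and b; learning_rate and epochs stay fixed."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return mse(w, b)  # final training loss


for lr in (0.001, 0.05, 0.2):
    print(f"learning rate {lr}: loss after 100 epochs = {train(lr, 100):.3g}")
```

With these toy numbers the smallest rate is still far from the minimum after 100 epochs, the middle rate gets very close to it, and the largest rate makes each update overshoot so the loss grows instead of shrinking.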

Hyperparameters can relate to model selection (e.g., model type or model architecture) or to the learning algorithm (e.g., learning rate, batch size, or number of epochs).
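One way to see the split between a model-selection hyperparameter and the learned parameters is a polynomial model, used here purely as a hypothetical example: its degree is chosen before training and fixes how many coefficients (parameters) the model has, while training would only change the values of those coefficients. The function names are made up for illustration.

```python
def init_polynomial_model(degree):
    """degree is a model hyperparameter: it fixes the number of parameters."""
    # One coefficient per power of x, from x**0 up to x**degree
    return [0.0] * (degree + 1)


def predict(coeffs, x):
    """Evaluate c0 + c1*x + c2*x**2 + ... for the given coefficients."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


line = init_polynomial_model(degree=1)   # 2 parameters, like w and b
cubic = init_polynomial_model(degree=3)  # 4 parameters

print(len(line), len(cubic))             # 2 4
print(predict([1.0, 2.0], 3.0))          # 1 + 2*3 = 7.0
```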

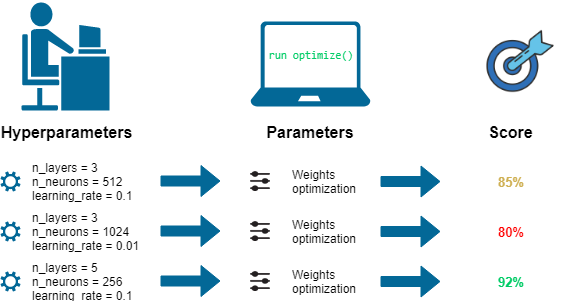

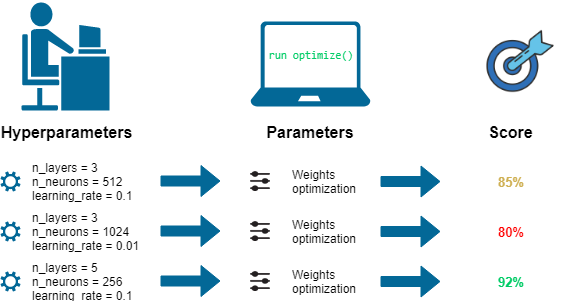

There is no general, efficient algorithm that finds the optimal (best) hyperparameter values. Instead, they are found by trial and error: adjusting their values for a particular machine learning task and checking how well the resulting model performs. This process of determining hyperparameter values is called hyperparameter tuning.

Hyperparameter tuning is much like tuning a guitar: you turn the tuning pegs to adjust the tension of a string until it plays the correct note.

Similarly, in hyperparameter tuning we keep trying different hyperparameter values (and possibly different learning algorithms) until we find the combination with the lowest error on a validation set.
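Below is a minimal sketch of this trial-and-error loop as a grid search, one simple tuning strategy. It assumes a toy one-feature linear model trained by gradient descent; the data points, the candidate values, and the function names are all made up for illustration. Each combination of learning rate and number of epochs trains a model on the training set, and the combination with the lowest validation error is kept.

```python
from itertools import product

# Made-up toy data, roughly following y = 2x + 1
train_xs, train_ys = [0.0, 1.0, 2.0, 3.0, 4.0], [1.1, 2.9, 5.2, 6.8, 9.1]
val_xs, val_ys = [0.5, 1.5, 2.5, 3.5], [2.0, 4.1, 5.9, 8.0]


def mse(w, b, xs, ys):
    """Mean squared error of y = w * x + b on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(learning_rate, epochs):
    """Learn w and b on the training set with full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(train_xs)
    pairs = list(zip(train_xs, train_ys))
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Candidate hyperparameter values to try (the "knob positions")
learning_rates = [0.001, 0.01, 0.05]
epoch_counts = [10, 100, 500]

results = {}
for lr, epochs in product(learning_rates, epoch_counts):
    w, b = train(lr, epochs)
    results[(lr, epochs)] = mse(w, b, val_xs, val_ys)  # judged on validation data

best = min(results, key=results.get)
print("best (learning rate, epochs):", best, "validation MSE:", round(results[best], 4))
```

Every extra hyperparameter multiplies the number of training runs (here 3 × 3 = 9), which is why tuning can become expensive.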